This is an interesting and moving read: a tribute to the late Dennis Ritchie, delivered at Dennis Ritchie Day at Bell Labs, Murray Hill, NJ, on September 7, 2012.

Author Archives: Graham Douglas

The Mysterious etaoin shrdlu

I just love this story, from a preview trailer of Linotype: The Film.

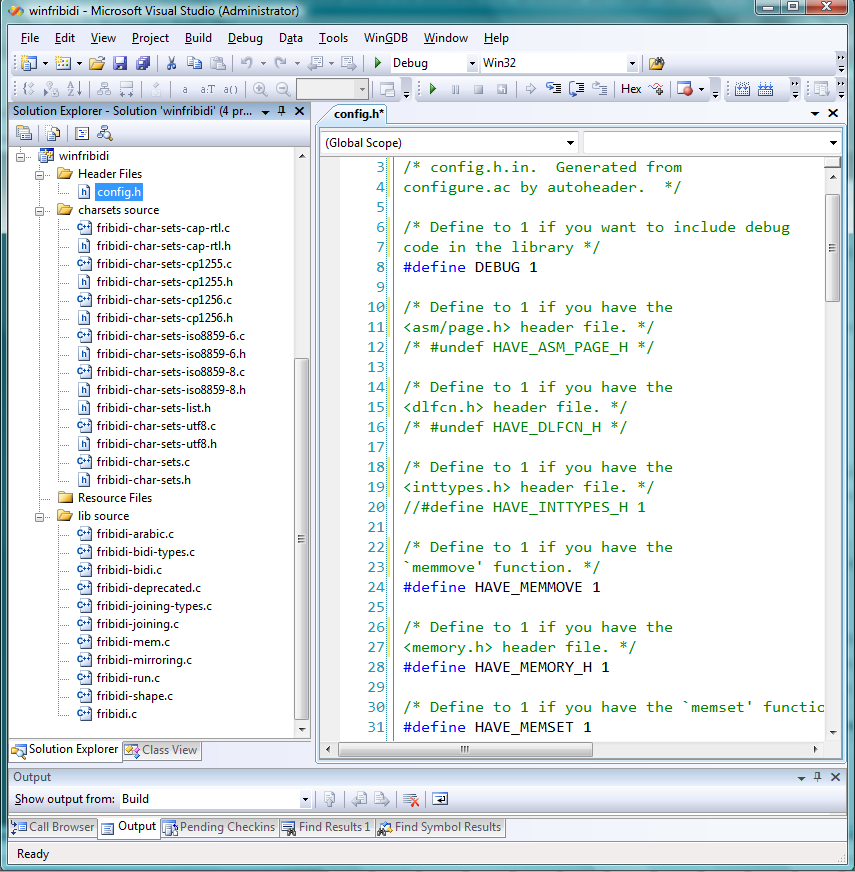

One way to compile GNU Fribidi as a static library (.lib) using Visual Studio

Introduction and caveat reader

Yesterday I spent about half an hour seeing if I could get the GNU Fribidi C library (version 0.19.2) to build as a static library (.lib) under Windows, using Visual Studio. Well, I cheated a bit and used my MinGW/MSYS install (which I use to build LuaTeX) to create the config.h header. Anyway, it built OK, so I thought I’d share what I did, but please be aware that I’ve not yet fully tested the .lib I built, so use these notes with care. I merely provide them as a starting point.

config.h

If you’ve ever used MinGW/MSYS or Linux build tools, you’ll know that config.h is a header file generated by the configure script as part of the standard Linux-style build process. In essence, config.h sets a number of #defines based on your MinGW/MSYS build environment, and you need to copy the resulting config.h into your Visual Studio project. The point to note, however, is that the config.h generated under MinGW/MSYS may contain #defines which “switch on” certain headers etc. that are not available to your Visual Studio setup. What I do is comment out a few of the config.h #defines to get a set that works. This is a bit kludgy, but to date it has usually worked out for me. If you don’t have MinGW/MSYS installed, you can download the config.h I generated and tweaked. Again, I make no guarantees it’ll work for you.
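To give a flavour of the kind of edit I mean, here is a minimal C sketch. The entries are illustrative, not copied from my actual config.h: HAVE_UNISTD_H and HAVE_STRINGS_H are typical candidates for commenting out because Visual Studio does not ship <unistd.h> or <strings.h>, but which entries you need to disable depends on your own MinGW/MSYS output. The function posix_headers_enabled() is a hypothetical helper added only so the sketch has something to call.

```c
/* Illustrative excerpt of a hand-tweaked config.h (not the real file).
   The configure-generated version contains many more entries. */
#define HAVE_STDLIB_H 1
#define HAVE_STRING_H 1
/* #define HAVE_UNISTD_H 1 */   /* commented out: MSVC has no <unistd.h> */
/* #define HAVE_STRINGS_H 1 */  /* commented out: MSVC has no <strings.h> */

/* Source files then typically guard their includes like this, so a
   commented-out #define stops the compiler looking for that header. */
#ifdef HAVE_STDLIB_H
#  include <stdlib.h>
#endif
#ifdef HAVE_STRING_H
#  include <string.h>
#endif
#ifdef HAVE_UNISTD_H
#  include <unistd.h>
#endif
#ifdef HAVE_STRINGS_H
#  include <strings.h>
#endif

/* Hypothetical helper: returns 1 if either POSIX-only header was
   switched on by the config above, 0 otherwise. */
int posix_headers_enabled(void)
{
#if defined(HAVE_UNISTD_H) || defined(HAVE_STRINGS_H)
    return 1;
#else
    return 0;
#endif
}
```

With the two POSIX-only entries commented out, posix_headers_enabled() returns 0 and neither header is ever requested.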

An important Preprocessor Definition

In your Visual Studio project’s Preprocessor Definitions (Configuration Properties > C/C++ > Preprocessor) you need to add HAVE_CONFIG_H, which tells the source files to include config.h.
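Autoconf-style sources usually pull in config.h through a guard along the lines of the sketch below (an illustration of the common idiom, not a verbatim copy of the FriBidi sources). Adding HAVE_CONFIG_H under Preprocessor Definitions is equivalent to compiling with /DHAVE_CONFIG_H, which the first #define simulates. CONFIG_H_REQUESTED and config_h_requested() are hypothetical names used only for this example.

```c
/* Simulates adding HAVE_CONFIG_H to Preprocessor Definitions, which
   is the same as compiling with /DHAVE_CONFIG_H (value 1). */
#define HAVE_CONFIG_H 1

/* The usual autoconf guard: config.h is only included when asked for. */
#ifdef HAVE_CONFIG_H
/* #include <config.h>   (commented out so this sketch compiles
                            without a real config.h on disk) */
#  define CONFIG_H_REQUESTED 1
#else
#  define CONFIG_H_REQUESTED 0
#endif

/* Hypothetical helper: reports whether config.h would be included. */
int config_h_requested(void)
{
    return CONFIG_H_REQUESTED;
}
```

Without the definition, the guard skips config.h entirely and none of its #defines take effect, which is why the build goes wrong if you forget it.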

Two minor changes to the source code

Because I’m building a static library (.lib), I made two tiny edits to the source code (again, there are better ways to do this properly), both to the definition of FRIBIDI_ENTRY. Within common.h and fribidi-common.h there are tests for WIN32 which end up setting:

#define FRIBIDI_ENTRY __declspec(dllexport)

For example, in common.h

...
#if (defined(WIN32)) || (defined(_WIN32_WCE))
#define FRIBIDI_ENTRY __declspec(dllexport)
#endif /* WIN32 */
...

I edited this to

#if (defined(WIN32)) || (defined(_WIN32_WCE))
#define FRIBIDI_ENTRY
#endif /* WIN32 */

i.e., I removed the __declspec(dllexport). I made the same change in fribidi-common.h.
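As for the “better ways”, one option is to make the export decoration switchable, so the headers don’t need editing for each kind of build. The sketch below assumes a hypothetical FRIBIDI_BUILD_STATIC macro (my own invented name, not something taken from the FriBidi 0.19.2 sources) that you would add to Preprocessor Definitions for a static build. fribidi_entry_expansion() and entry_demo_add() are also hypothetical, there only to show what the macro expands to.

```c
/* Switchable export macro. FRIBIDI_BUILD_STATIC is a hypothetical
   name for this sketch, not a macro FriBidi itself checks. */
#if defined(WIN32) || defined(_WIN32) || defined(_WIN32_WCE)
#  if defined(FRIBIDI_BUILD_STATIC)
#    define FRIBIDI_ENTRY                        /* static .lib */
#  else
#    define FRIBIDI_ENTRY __declspec(dllexport)  /* DLL build */
#  endif
#else
#  define FRIBIDI_ENTRY                          /* non-Windows */
#endif

/* Two-step stringification so FRIBIDI_ENTRY is expanded first. */
#define STRINGIFY_(x) #x
#define STRINGIFY(x) STRINGIFY_(x)

/* Hypothetical helper: returns the text FRIBIDI_ENTRY expands to. */
const char *fribidi_entry_expansion(void)
{
    return STRINGIFY(FRIBIDI_ENTRY);
}

/* Hypothetical function declared with the macro, as library code would be. */
FRIBIDI_ENTRY int entry_demo_add(int a, int b)
{
    return a + b;
}
```

On a non-Windows compiler, or on Windows with FRIBIDI_BUILD_STATIC defined, fribidi_entry_expansion() returns an empty string. A fuller version would normally also switch to __declspec(dllimport) for code that consumes the DLL, which I’ve left out to keep things short.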

One more setting

I also made sure that FRIBIDI_CHARSETS was set to 1 in fribidi-config.h:

#define FRIBIDI_CHARSETS 1

And finally

You simply need to create a new static library project, make sure that all the relevant include paths are set correctly, and then try the edits and settings suggested above to see if they work for you. Here is a screenshot of my project showing the C files I added to it; they come from the …\charset and …\lib folders of the C source distribution.

With the above steps the library built with just two level 4 compiler warnings, after I had added the _CRT_SECURE_NO_WARNINGS preprocessor definition to disable the CRT deprecation warnings. I hope these notes are useful, but do please note that I have not thoroughly tested the resulting .lib file, so please be sure to perform your own due diligence.
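For anyone who hasn’t met _CRT_SECURE_NO_WARNINGS: it has to be defined before any C runtime header is included (or added to the project’s Preprocessor Definitions), otherwise Visual Studio still reports functions such as strcpy as deprecated (warning C4996). Here is a minimal sketch; copy_if_fits() is a hypothetical helper, not anything from FriBidi.

```c
/* Must come before any CRT header; other compilers ignore it. */
#define _CRT_SECURE_NO_WARNINGS
#include <string.h>

/* Hypothetical helper: copies src into dst (capacity dstsize bytes)
   if it fits, including the terminating NUL. Returns 0 on success,
   -1 if src is too long. strcpy here would trigger C4996 in MSVC
   without the definition above. */
int copy_if_fits(char *dst, size_t dstsize, const char *src)
{
    if (strlen(src) + 1 > dstsize)
        return -1;
    strcpy(dst, src);
    return 0;
}
```

The length check is there because silencing the warning doesn’t make strcpy any safer; it only stops the compiler nagging.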

A few recollections from my freelance days

A slight departure from the usual posts on this blog 🙂

In the early 1990s, before large-scale offshoring came into play, I started working freelance, editing and typesetting technical books using Windows-based software (FrameMaker, before Adobe bought it), and well before PDF became the standard way to transfer files to printers and “film bureaux”. Film, remember that? Positive/negative, right/wrong reading emulsion side down… Printers and bureaux were dominated by the Macintosh (maybe they still are), so before PDF was in common use, transferring Windows-generated typeset material/files to commercial printers was, at times, a bit of a nightmare. I was working for a number of big book publishers who all used different printers, each with their own requirements for accepting electronic files, which inevitably meant PostScript if you were working on a PC.

I still have vivid memories of generating and shipping hundreds of megabytes of raw PostScript code using SyQuest drives: then the only “high capacity” removable media accepted by printers. Ubiquitous, low-cost, high-speed electronic transfer of huge amounts of data was still in the future, unless you had ISDN, which I didn’t and couldn’t afford. My first forays into the online world were bulletin boards and CompuServe, and I was the proud owner of a US Robotics Sportster 14,400 fax modem. I remember the joys of the Hayes command set, 7- or 8-bit data, odd or even parity and all the weird arcana of the comms technology of the time. Enough already, too many memories!

Incidentally, a single 88 MB SyQuest disk cost (from memory) around £60 in the early to mid 1990s! I confess that I hated using SyQuest drives because you could never be sure that a disk formatted on the PC could be mounted (i.e., opened) on a Macintosh at the printers due to disk formatting issues. After generating 500 MB of PostScript data and couriering it across the Atlantic to meet a deadline, you don’t want to hear that your disks can’t be read. The introduction of the Iomega ZIP drive was a blessing and wiped out SyQuest’s market virtually overnight. Whenever I recall SyQuest drives I cannot help but think of the Trabant. Yes, maybe I should have used a Mac, but the vast majority of Word files (for technical books) I received from publishers were generated on a PC, in an era when transferring Word files between Mac and Windows was not always a “joy” and cleaning up the “converted” Word files could be a lot of work.

Generating reliable PostScript code via the Windows 3.1 PostScript printer driver was an exercise in the darkest arts, and the main reason I had to become, at the time, an expert in PostScript programming: I needed to understand what was going on and how all those bizarre options in the print dialog box affected the PostScript code. Page independence, printer VM (memory) and a host of other settings made the difference between PostScript that would RIP successfully and PostScript that wouldn’t. I recall the “font wars” of TrueType vs Adobe Type 1 font file formats: “Type 1 rules, TrueType sucks” was an oft-quoted mantra of the times, and certainly the conversion of TrueType fonts for inclusion in the PostScript data stream was not always without hassles…

Anyway, enough of this. Monty Python Yorkshire Man sketch, anybody?

100 video clips of an interview with Donald Knuth

It’s amazing what you find: here are nearly 100 video clips of an interview with Donald Knuth, each around 2 to 7 minutes long. http://www.webofstories.com/play/17060?o=FHP

Full getopt Port for Unicode and Multibyte Microsoft Visual C, C++, or MFC Projects

If you have ever tried to port Linux apps to Windows you may find this useful:

http://www.codeproject.com/Articles/157001/Full-getopt-Port-for-Unicode-and-Multibyte-Microso

From the codeproject site: “This software was written after hours of searching for a robust Microsoft C and C++ implementation of getopt, which led to devoid results. This software is a modification of the Free Software Foundation, Inc. getopt library for parsing command line arguments and its purpose is to provide a Microsoft Visual C friendly derivative.”
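For anyone who hasn’t used getopt, here is a minimal sketch of the kind of code such a port lets you compile. It is written against the POSIX getopt from <unistd.h>; I’m assuming the codeproject port exposes the same getopt/optarg/optind/opterr interface through its own header, so check its documentation for the exact include. The struct options type, the -v/-o options and parse_args() are hypothetical, for illustration only.

```c
/* Exposes getopt in <unistd.h> under strict POSIX compilers; with the
   MSVC port you would include the port's header instead (assumption). */
#define _POSIX_C_SOURCE 200809L
#include <stddef.h>
#include <unistd.h>

/* Hypothetical option set: -v (flag) and -o FILE. */
struct options {
    int verbose;
    const char *output;
};

/* Parses argv into opts. Returns the index of the first non-option
   argument, or -1 on an unknown option or a missing -o argument. */
int parse_args(int argc, char *argv[], struct options *opts)
{
    int c;
    opts->verbose = 0;
    opts->output = NULL;
    opterr = 0;   /* suppress getopt's own error messages */
    optind = 1;   /* restart scanning in case of an earlier call */
    while ((c = getopt(argc, argv, "vo:")) != -1) {
        switch (c) {
        case 'v':
            opts->verbose = 1;
            break;
        case 'o':
            opts->output = optarg;  /* points into argv, not a copy */
            break;
        default:  /* '?': unknown option or missing argument */
            return -1;
        }
    }
    return optind;
}
```

For example, running parse_args on `prog -v -o out.txt` sets verbose to 1, points output at the "out.txt" element of argv and returns 4, the index just past the last option.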